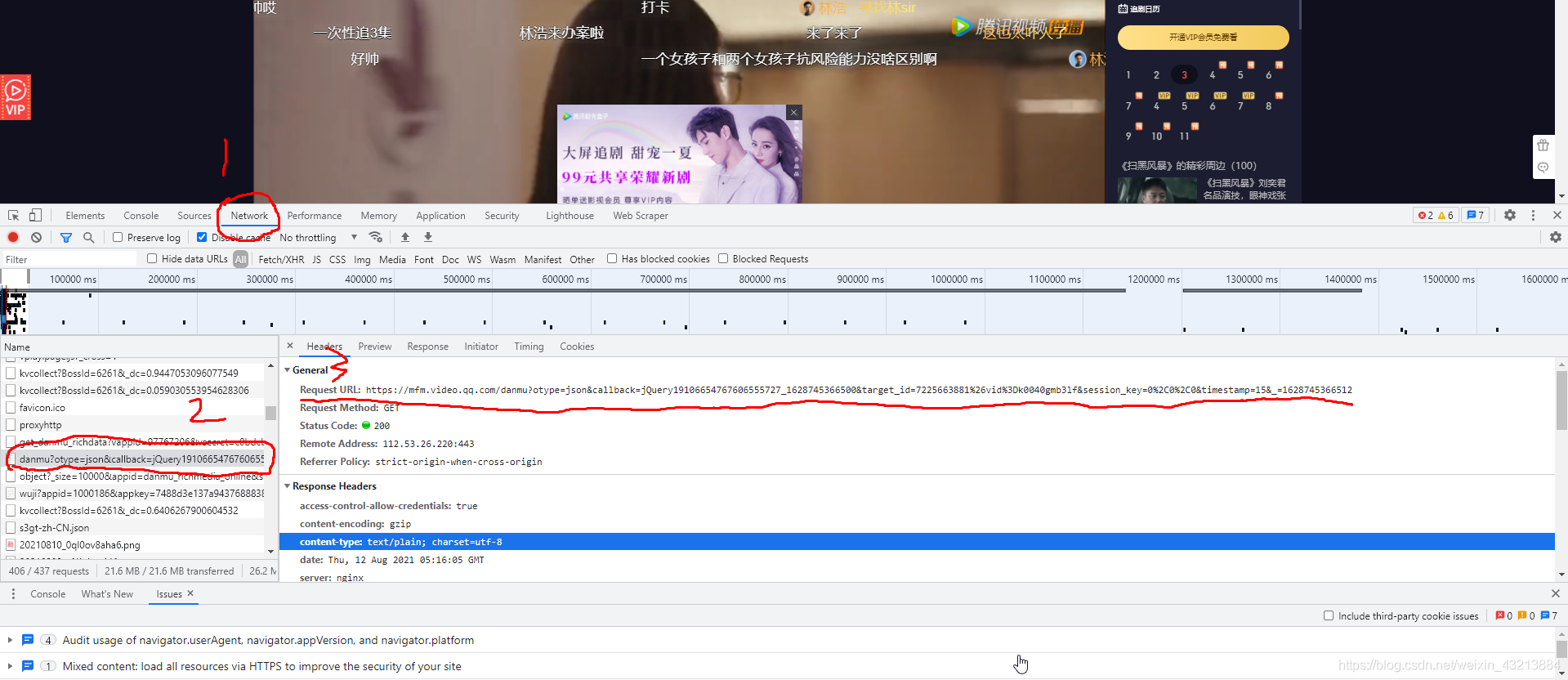

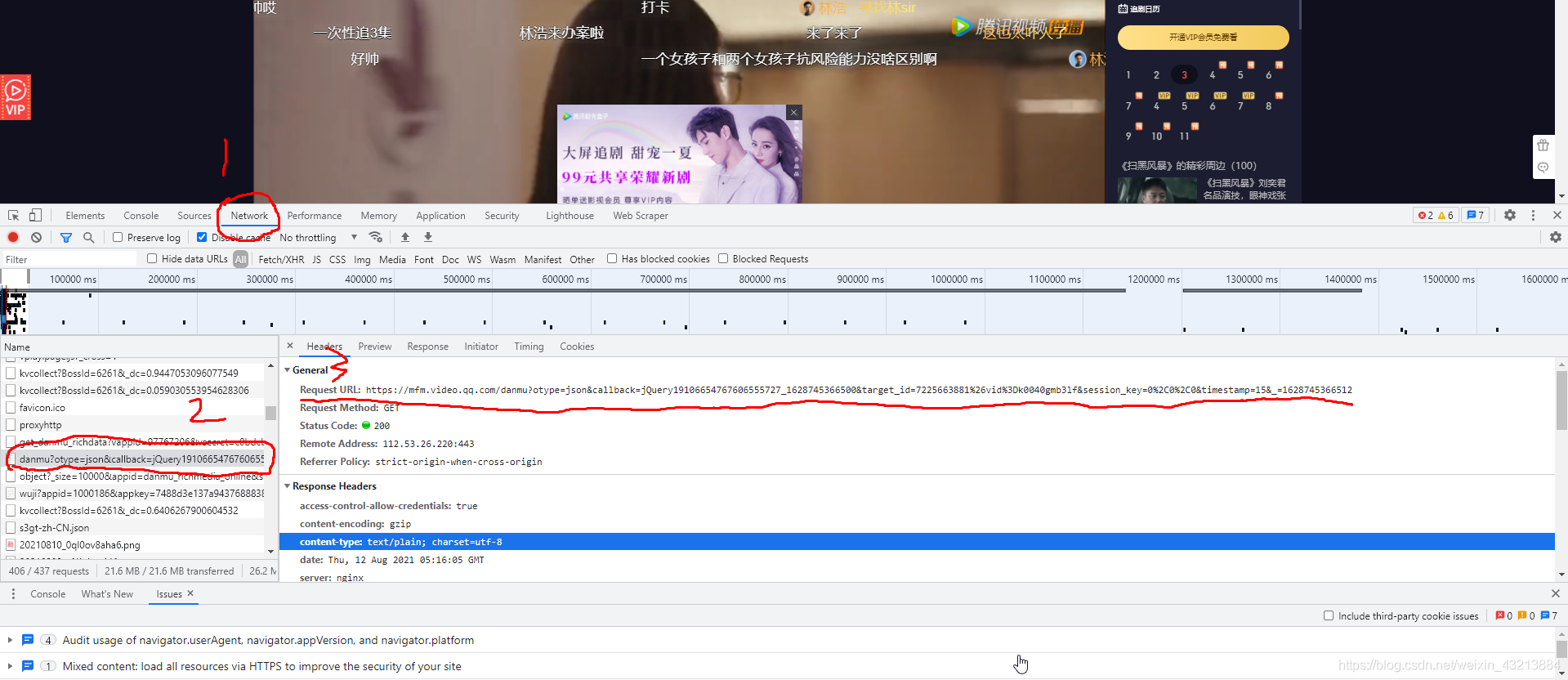

The key step is finding the URL that serves the danmu (bullet comments).

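Wait for the ad to finish, then press F12 to open the browser's developer tools.

Press Ctrl+R to refresh the page so that the danmu request is captured.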
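Once the danmu request has been located, it helps to take its URL apart and see which parameters it carries. The following is a minimal sketch using the URL from this post with `timestamp` set to 15; the comments on what each parameter means are my reading of the URL, not documented behavior.

```python
from urllib.parse import urlsplit, parse_qs

# The danmu URL used in this post, with timestamp filled in as 15.
url = ('https://mfm.video.qq.com/danmu?otype=json&timestamp=15'
       '&target_id=5938032297%26vid%3Dx0034hxucmw&count=80')

parts = urlsplit(url)
# parse_qs returns a list per key; each key appears once here, so take item 0.
params = {key: values[0] for key, values in parse_qs(parts.query).items()}

print(parts.netloc, parts.path)
for name, value in params.items():
    # timestamp: presumably the playback position being requested
    # target_id: percent-encoded, so it decodes to "<id>&vid=<video id>"
    # count: presumably the maximum number of comments per response
    print(name, '=', value)
```

Only `timestamp` changes between requests in the code in this post; `target_id` identifies the video and has to be copied from the request captured in the browser.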

The code is as follows:

    import json
    import time

    import pandas as pd
    import requests

    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'}

    rows = []

    # Each request is keyed by a timestamp value. Note that range(15, 45, 30)
    # yields only 15; raise the stop value to fetch more pages.
    for page in range(15, 45, 30):
        url = ('https://mfm.video.qq.com/danmu?otype=json&timestamp={}'
               '&target_id=5938032297%26vid%3Dx0034hxucmw&count=80').format(page)
        print("Fetching page " + str(page))

        html = requests.get(url, headers=headers)
        print(html)  # shows the HTTP status, e.g. <Response [200]>

        # strict=False tolerates raw control characters inside JSON strings.
        bs = json.loads(html.text, strict=False)
        time.sleep(1)  # pause between requests

        for i in bs['comments']:
            rows.append({
                '弹幕': i['content'],             # comment text
                '会员等级': i['uservip_degree'],  # VIP level of the user
                '发布时间': i['timepoint'],       # time point of the comment
                '弹幕点赞': i['upcount'],         # number of likes
                '弹幕id': i['commentid'],         # comment id
            })

    # Build the DataFrame once instead of concatenating inside the loop.
    df = pd.DataFrame(rows)
    df.to_csv('tengxun_danmu.csv', encoding='utf-8-sig')
    print(df.shape)
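The loop above steps through `timestamp` values with `range`. As a rough sketch of how that range behaves, the hypothetical helper `danmu_timestamps` below (not part of the original code) lists the values that would be requested for a given stop value. The start of 15 and step of 30 are taken from the code above; treating the stop value as the video length in seconds is my assumption.

```python
def danmu_timestamps(duration_seconds, start=15, step=30):
    """Return the timestamp values to request, mirroring range(start, stop, step).

    Hypothetical helper: duration_seconds is used as the stop value of the
    range, on the assumption that timestamps are measured in seconds.
    """
    return list(range(start, duration_seconds, step))


# Same arguments as the loop in this post: only one request is made.
print(danmu_timestamps(45))   # → [15]

# A larger stop value produces one request per 30-unit step.
print(danmu_timestamps(120))  # → [15, 45, 75, 105]
```

This shows why the code as written fetches a single page: the stop value 45 is reached after the first step, so it needs to be raised to cover more of the video.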
