Spark Big Data Analysis and Practice: HDFS File Operations

I. Installing Hadoop and Spark

The detailed installation steps are covered in my earlier blog posts; you can follow them here:

Basic Linux Environment Setup (CentOS 7) - Installing Hadoop

Basic Linux Environment Setup (CentOS 7) - Installing Scala and Spark

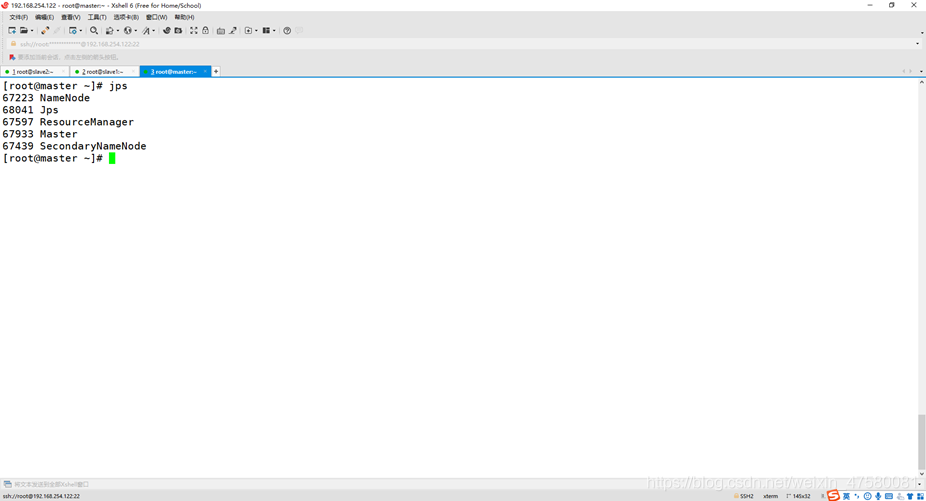

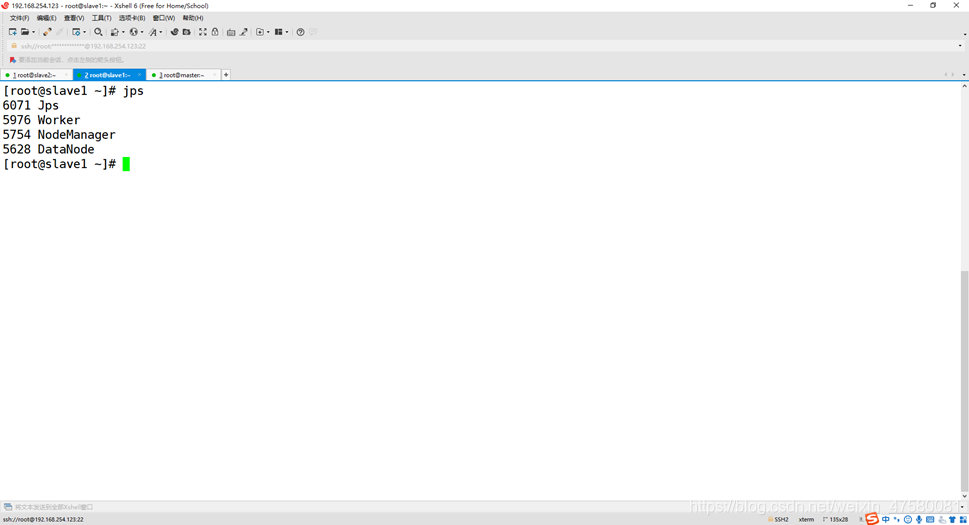

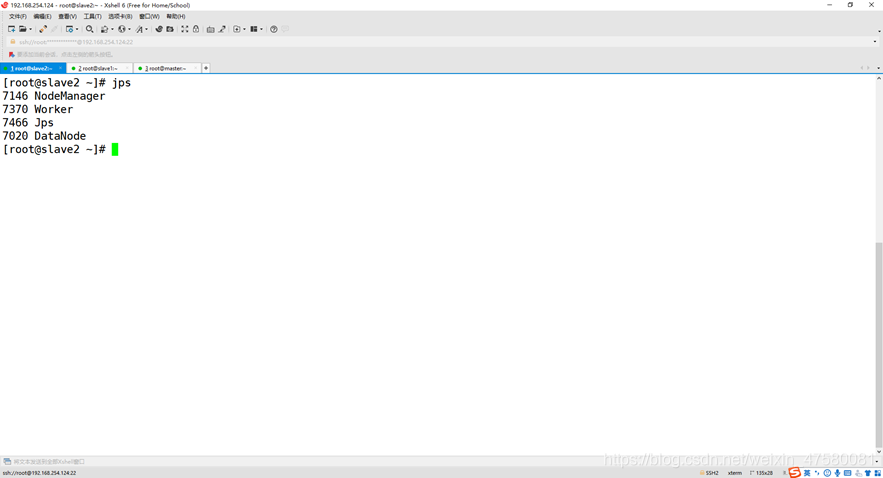

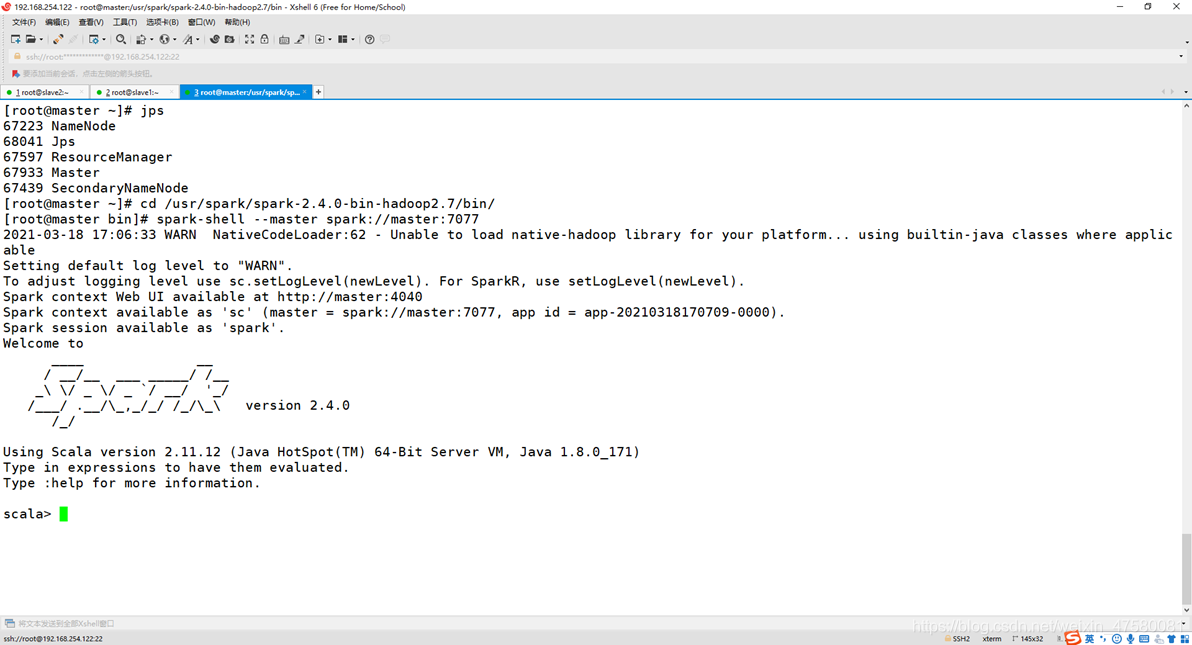

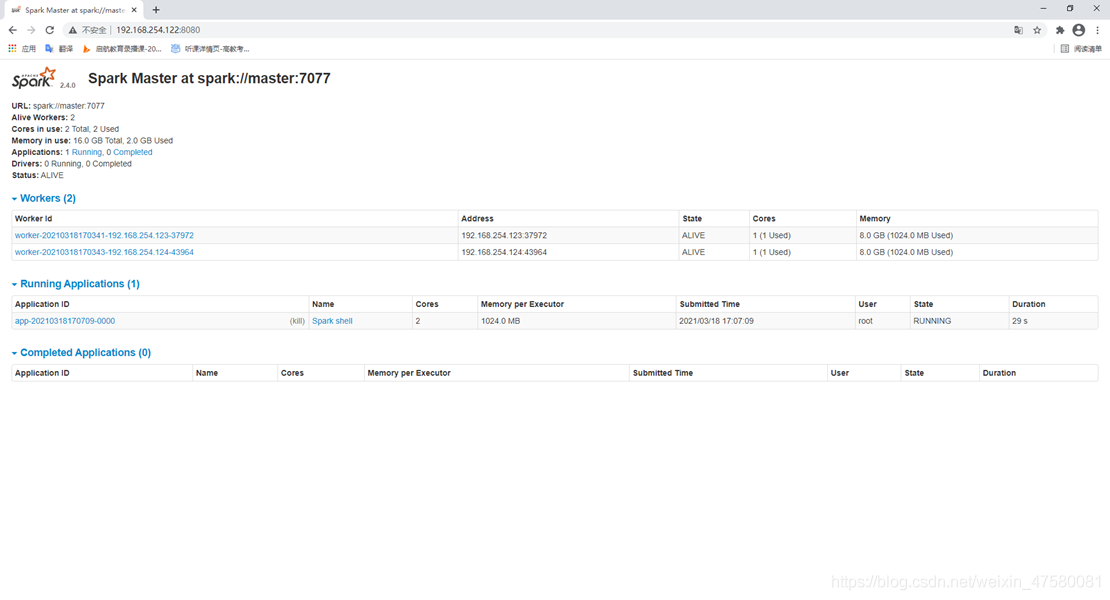

II. Starting Hadoop and Spark

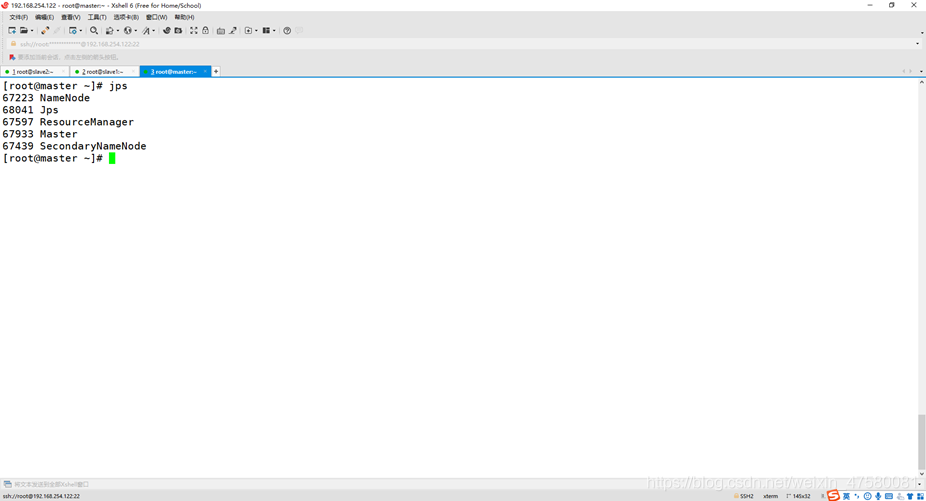

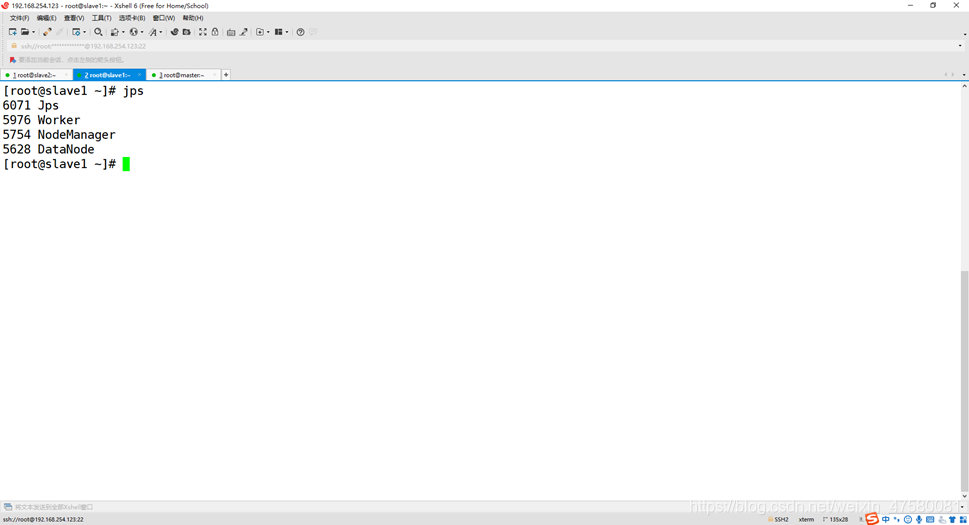

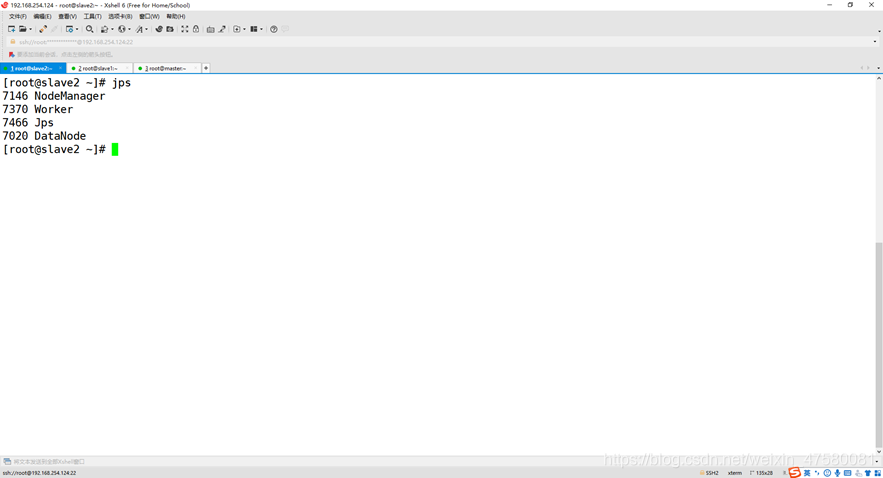

Check the running processes on the three nodes:

master

slave1

slave2

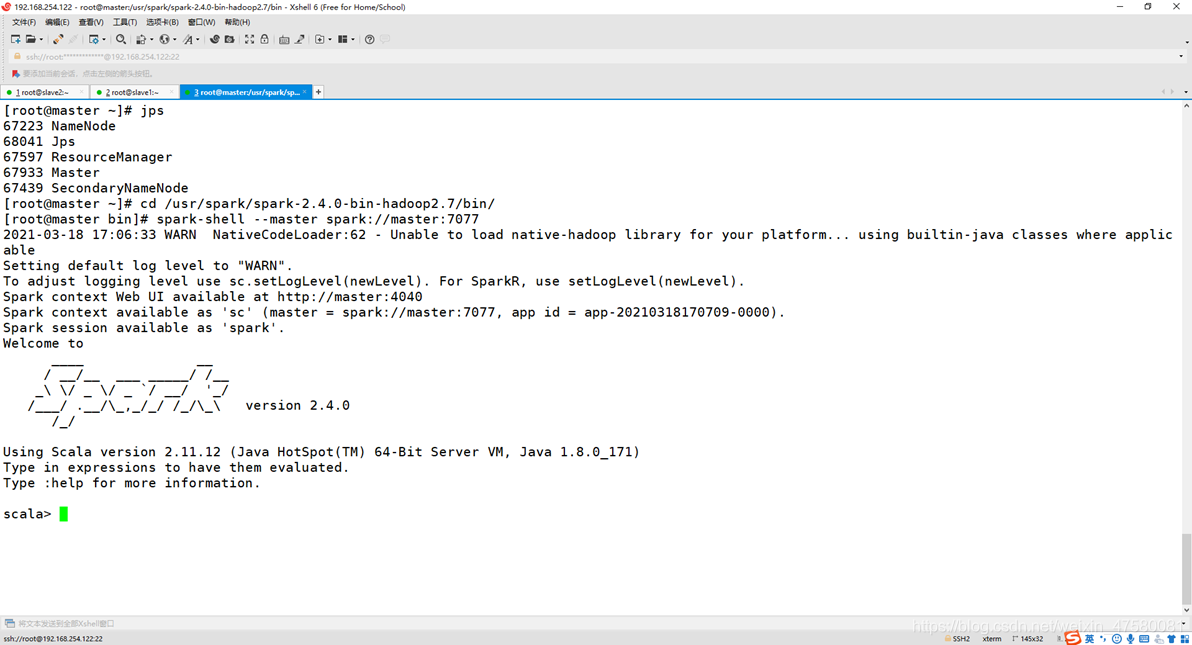

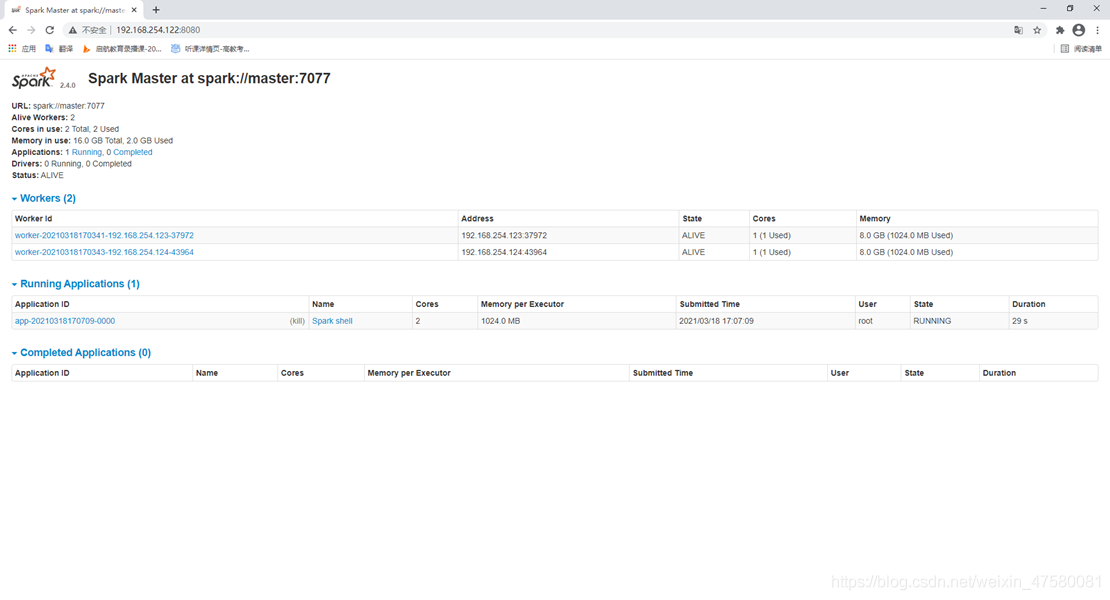

The spark-shell command-line interface and the web UI page:

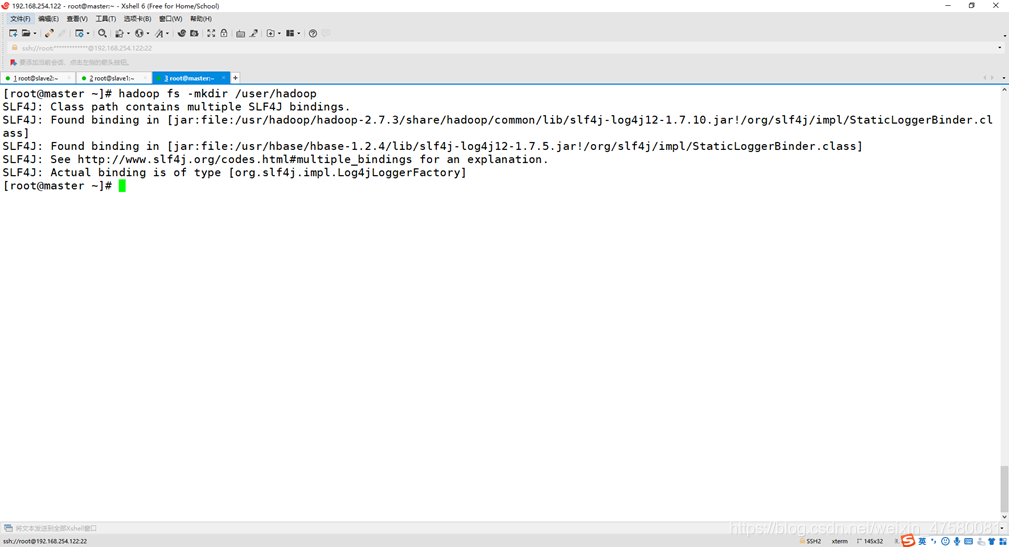

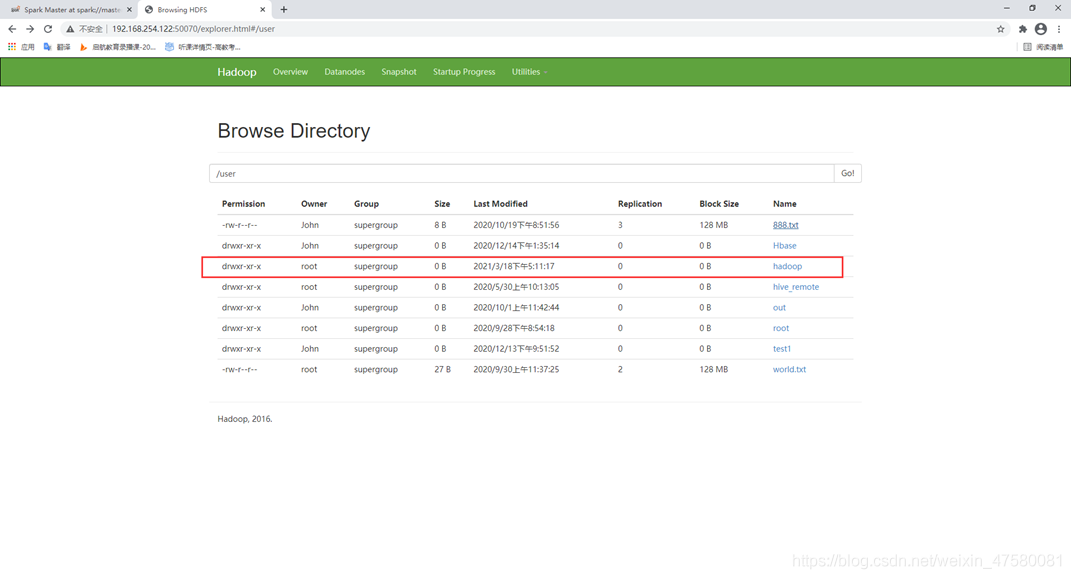

III. Common HDFS Operations

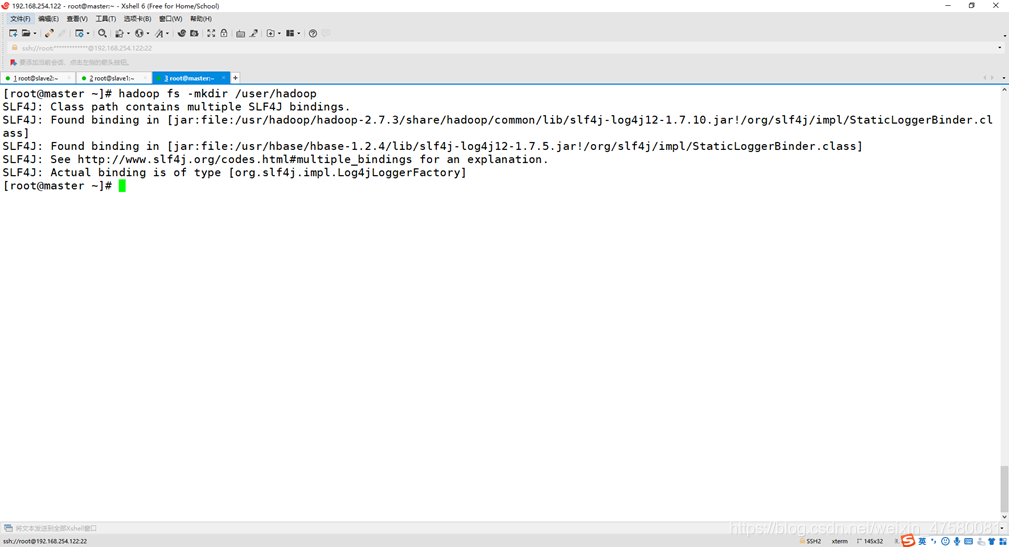

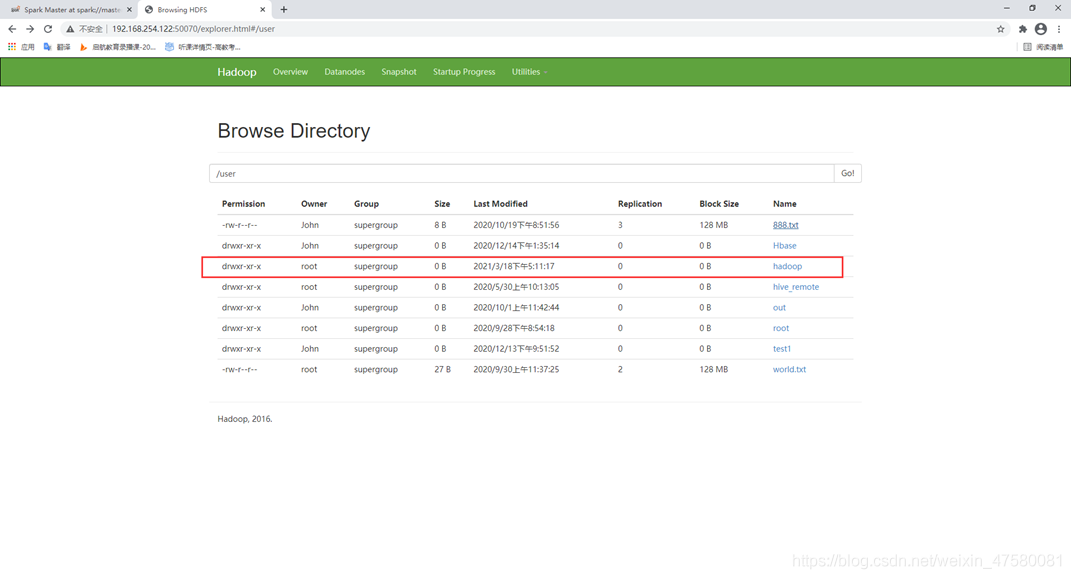

(1) Start Hadoop and create the user directory "/user/hadoop" in HDFS.

Shell commands:

[root@master ~]

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hbase/hbase-1.2.4/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Screenshots:

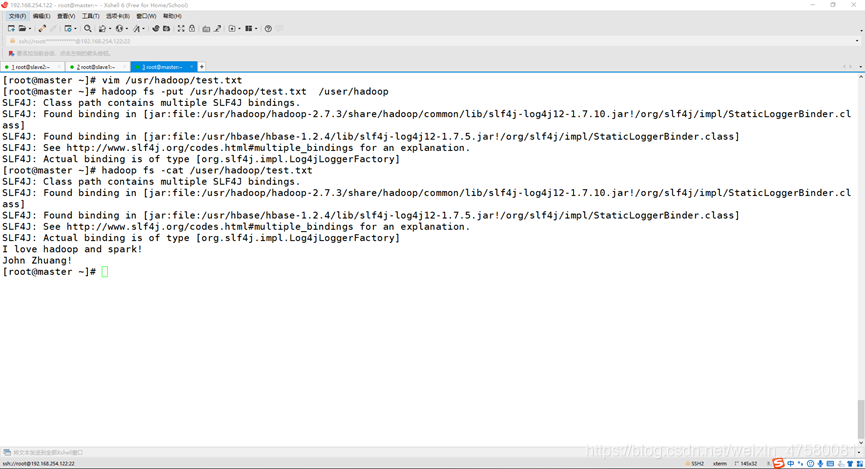

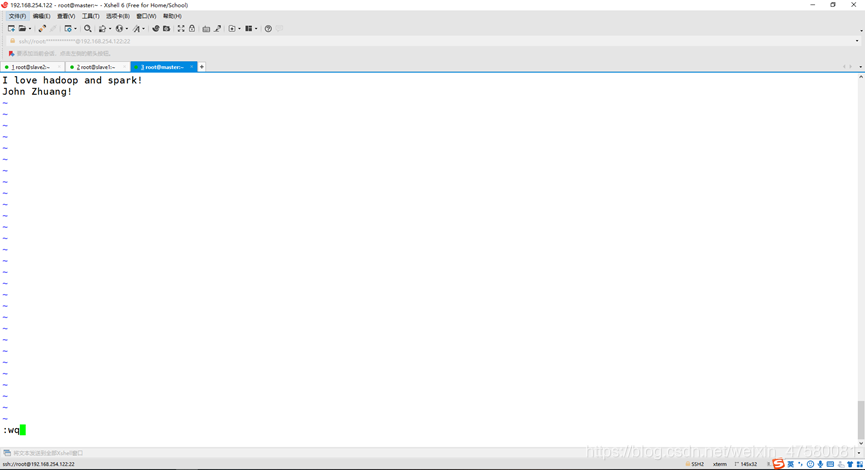

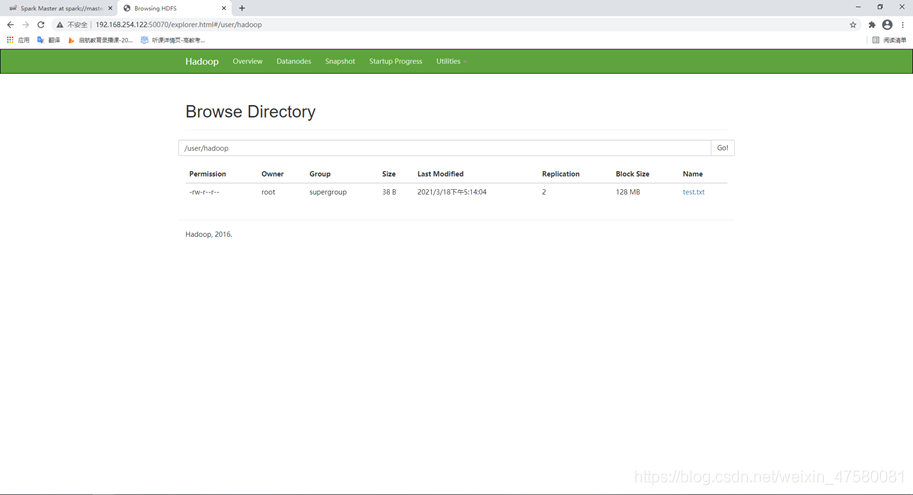

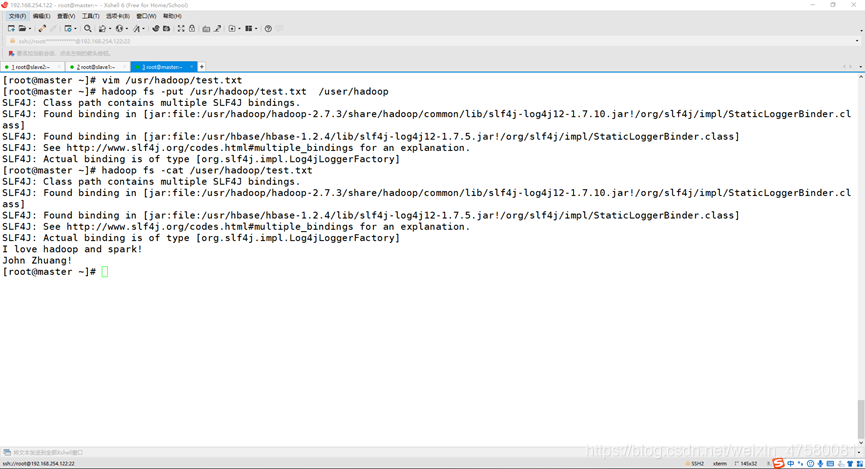

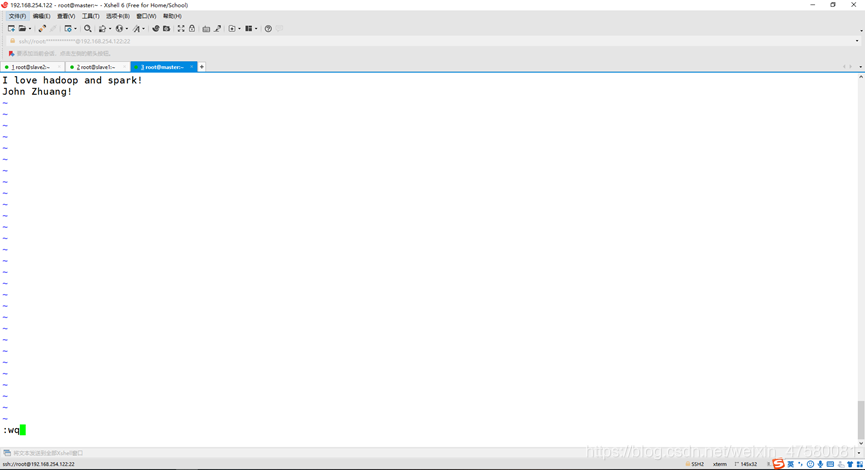
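The same step can also be done from Scala through the Hadoop `FileSystem` API, roughly what `hdfs dfs -mkdir -p /user/hadoop` does from the shell. This is a minimal sketch, not the author's code; it assumes the Hadoop 2.7.x client libraries are on the classpath together with the cluster's `core-site.xml` (which sets `fs.defaultFS`). The object name `MkdirSketch` is hypothetical.

```scala
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.fs.{FileSystem, Path}

// Hypothetical helper (not from the original post): Scala counterpart of `hdfs dfs -mkdir -p`.
// Assumes Hadoop 2.7.x client jars and the cluster's core-site.xml on the classpath;
// without core-site.xml, FileSystem.get falls back to the local file system.
object MkdirSketch {
  /** Creates `dir` together with any missing parent directories; returns true on success. */
  def mkdirs(fs: FileSystem, dir: String): Boolean = fs.mkdirs(new Path(dir))

  def main(args: Array[String]): Unit = {
    val fs = FileSystem.get(new Configuration()) // resolves fs.defaultFS, e.g. the HDFS NameNode
    try println(s"mkdirs /user/hadoop -> ${mkdirs(fs, "/user/hadoop")}")
    finally fs.close()
  }
}
```

Taking the `FileSystem` as a parameter keeps the helper usable against both HDFS and the local file system.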

(2) In the local Linux file system, create a text file test.txt in the "/home/hadoop" directory, type some arbitrary content into it, and then upload it to the "/user/hadoop" directory in HDFS.

Shell commands:

[root@master ~]

[root@master ~]


[root@master ~]


I love hadoop and spark!

John Zhuang!

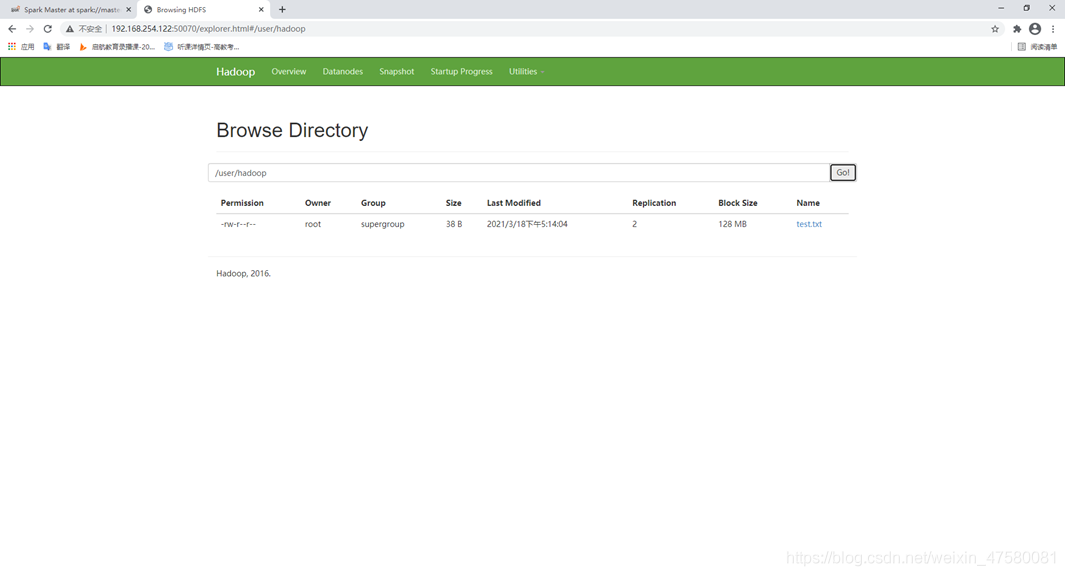

Screenshots:

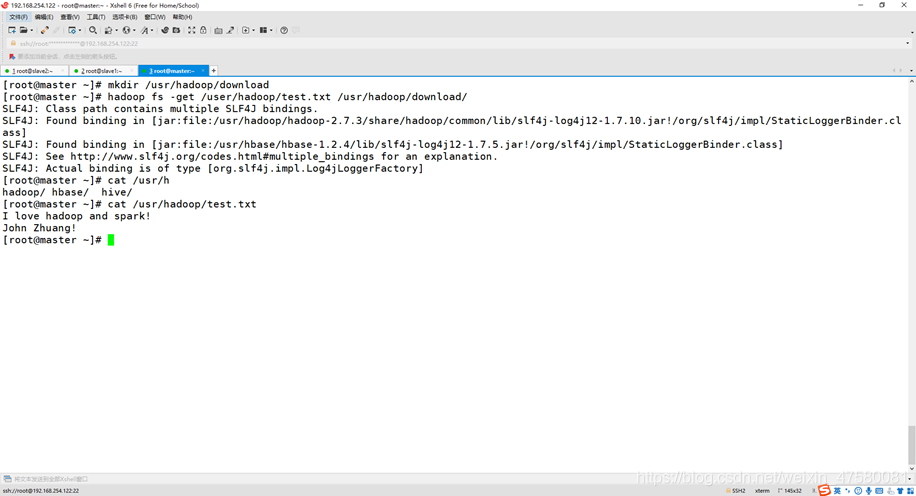

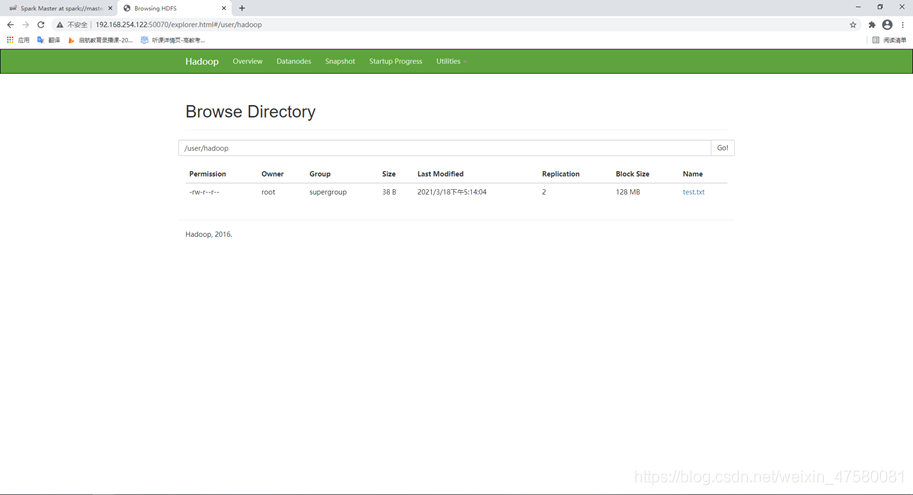

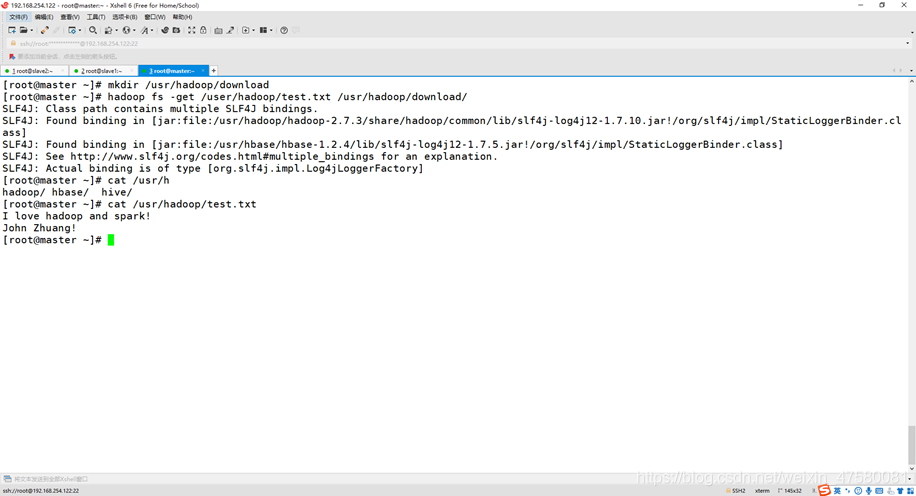
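Uploading can likewise be done from Scala with `FileSystem.copyFromLocalFile`. A minimal sketch, assuming the Hadoop 2.7.x client libraries and the cluster's `core-site.xml` are on the classpath; `PutSketch` is a hypothetical name, and unlike a plain `hdfs dfs -put` it overwrites an existing target (closer to `hdfs dfs -put -f`).

```scala
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.fs.{FileSystem, Path}

// Hypothetical helper (not from the original post): Scala counterpart of `hdfs dfs -put -f`.
// Assumes Hadoop 2.7.x client jars and the cluster's core-site.xml on the classpath.
object PutSketch {
  /** Copies `localFile` into `targetDir` on `fs`, keeping its file name; returns the target path. */
  def put(fs: FileSystem, localFile: String, targetDir: String): Path = {
    val src = new Path(localFile)
    val dst = new Path(targetDir, src.getName)
    // delSrc = false keeps the local file; overwrite = true replaces an existing target
    fs.copyFromLocalFile(false, true, src, dst)
    dst
  }

  def main(args: Array[String]): Unit = {
    val fs = FileSystem.get(new Configuration())
    try println(s"uploaded to ${put(fs, "/home/hadoop/test.txt", "/user/hadoop")}")
    finally fs.close()
  }
}
```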

(3) Download test.txt from the "/user/hadoop" directory in HDFS to the "/home/hadoop/下载" (Downloads) directory of the local Linux file system.

Shell commands:

[root@master ~]

[root@master ~]


[root@master ~]

hadoop/ hbase/ hive/

[root@master ~]

I love hadoop and spark!

John Zhuang!

Screenshots:

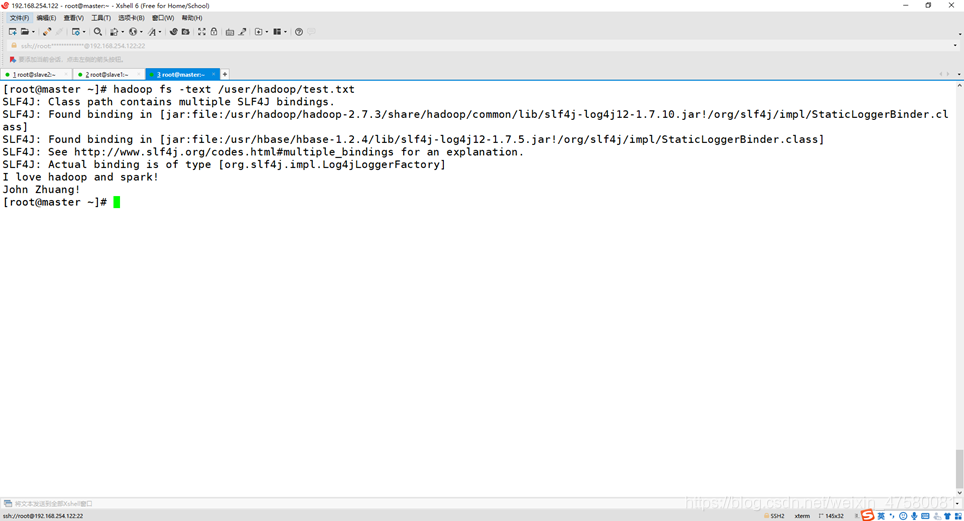

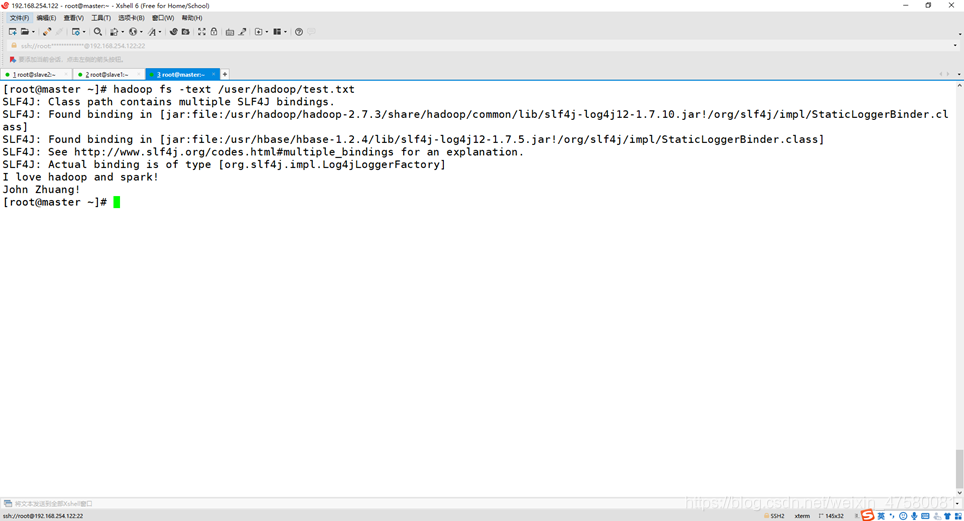
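Downloading maps to `FileSystem.copyToLocalFile`. A minimal sketch, assuming the Hadoop 2.7.x client libraries and the cluster's `core-site.xml` are on the classpath and that the local target directory already exists; `GetSketch` is a hypothetical name.

```scala
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.fs.{FileSystem, Path}

// Hypothetical helper (not from the original post): Scala counterpart of `hdfs dfs -get`.
// Assumes Hadoop 2.7.x client jars and the cluster's core-site.xml on the classpath.
object GetSketch {
  /** Copies `remoteFile` from `fs` into the existing local directory `localDir`. */
  def get(fs: FileSystem, remoteFile: String, localDir: String): Unit =
    // delSrc = false keeps the source; useRawLocalFileSystem = true skips writing a local .crc file
    fs.copyToLocalFile(false, new Path(remoteFile), new Path(localDir), true)

  def main(args: Array[String]): Unit = {
    val fs = FileSystem.get(new Configuration())
    try get(fs, "/user/hadoop/test.txt", "/home/hadoop/下载")
    finally fs.close()
  }
}
```

Because the destination is an existing directory, the file lands inside it under its original name.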

(4) Print the contents of test.txt in the HDFS "/user/hadoop" directory to the terminal.

Shell commands:

[root@master ~]


I love hadoop and spark!

John Zhuang!

Screenshots:

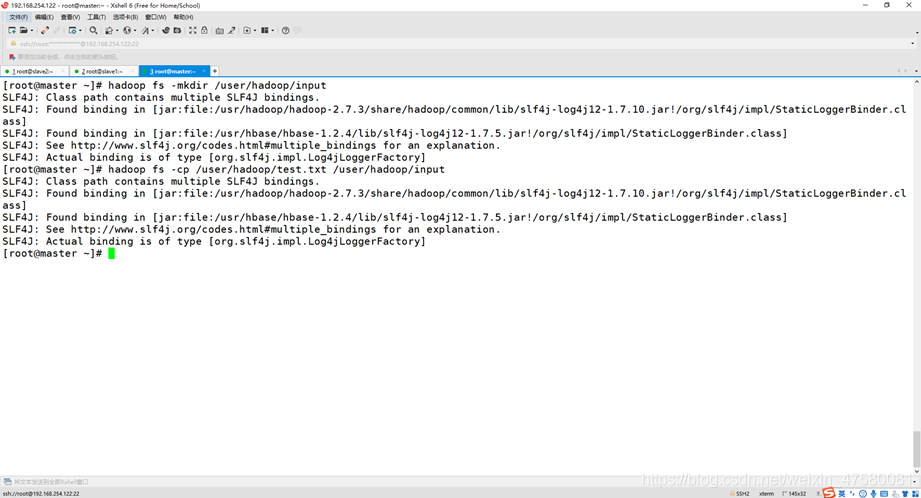

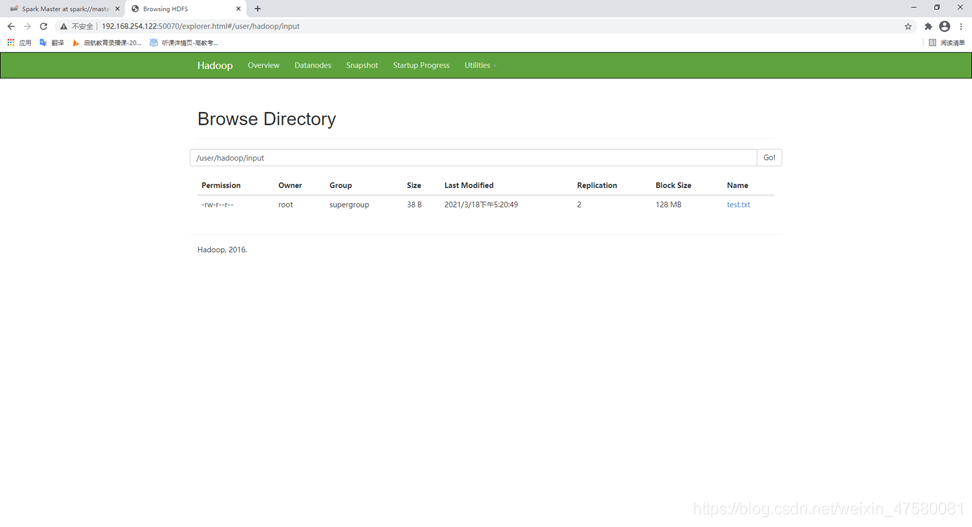

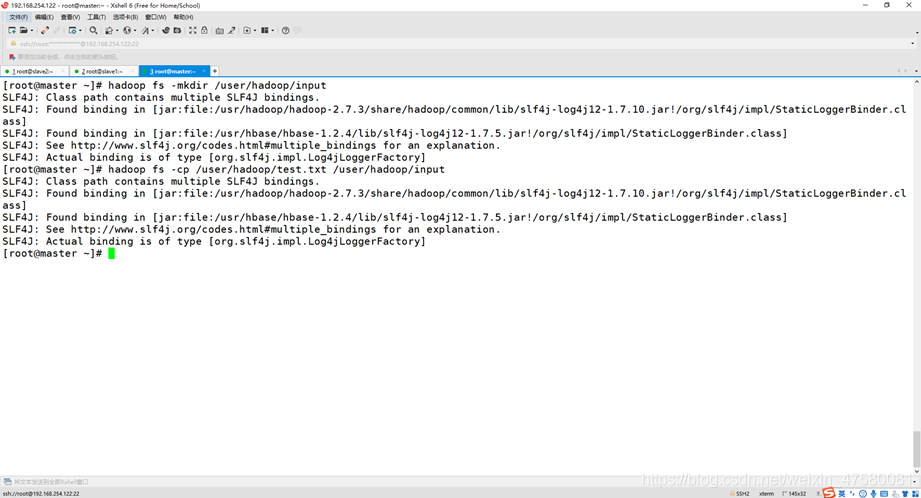

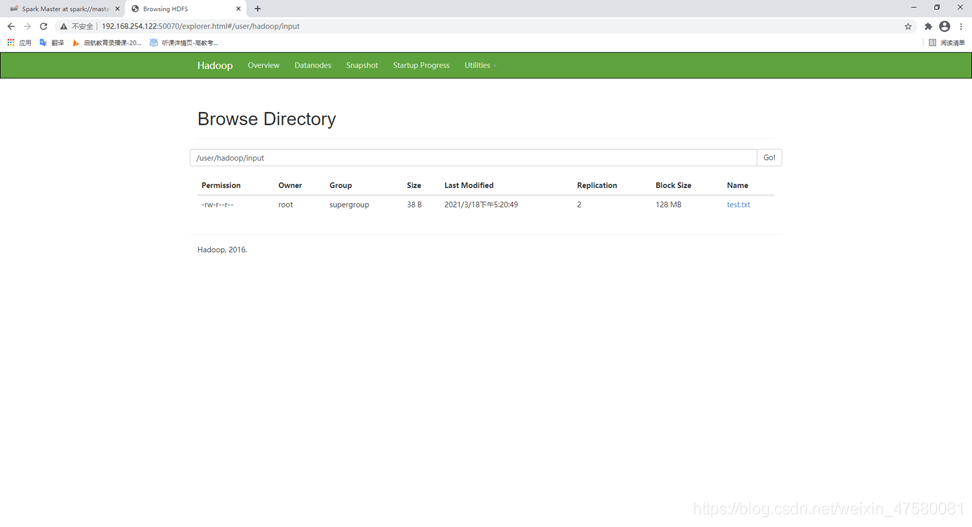
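Printing a file, as `hdfs dfs -cat` does, amounts to opening an input stream with `FileSystem.open` and reading it. A minimal sketch, assuming the Hadoop 2.7.x client libraries and the cluster's `core-site.xml` are on the classpath and a small UTF-8 text file; `CatSketch` is a hypothetical name.

```scala
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.fs.{FileSystem, Path}
import scala.io.Source

// Hypothetical helper (not from the original post): Scala counterpart of `hdfs dfs -cat`.
// Assumes Hadoop 2.7.x client jars and the cluster's core-site.xml on the classpath.
object CatSketch {
  /** Reads the whole file as UTF-8 text; fine for small files such as test.txt. */
  def cat(fs: FileSystem, file: String): String = {
    val in = fs.open(new Path(file))
    try Source.fromInputStream(in, "UTF-8").mkString
    finally in.close() // always release the stream, even if decoding fails
  }

  def main(args: Array[String]): Unit = {
    val fs = FileSystem.get(new Configuration())
    try print(cat(fs, "/user/hadoop/test.txt"))
    finally fs.close()
  }
}
```

For large files, streaming to the terminal would be preferable to building one `String` in memory.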

(5) In HDFS, create a subdirectory input under "/user/hadoop", then copy test.txt from "/user/hadoop" into "/user/hadoop/input".

Shell commands:

[root@master ~]


[root@master ~]


Screenshots:

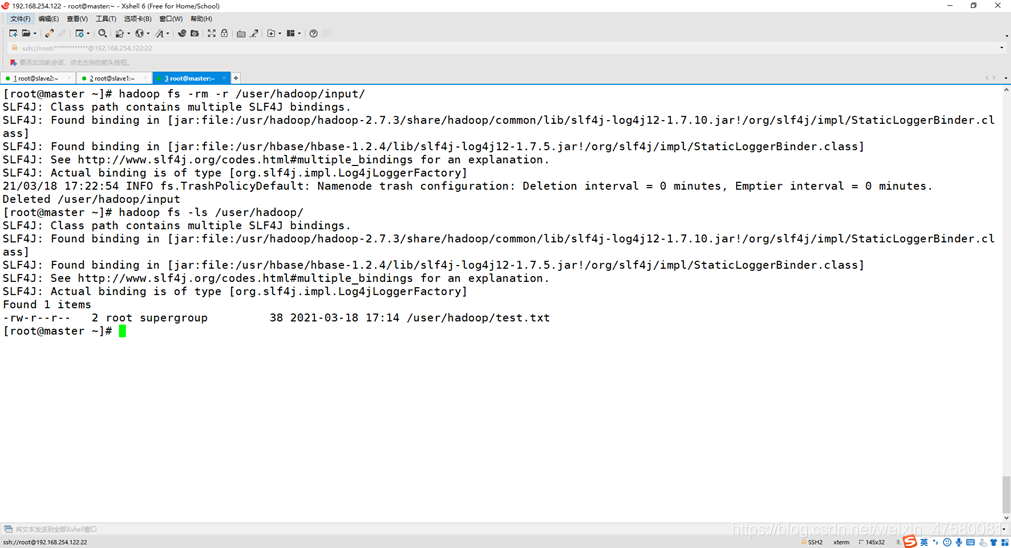

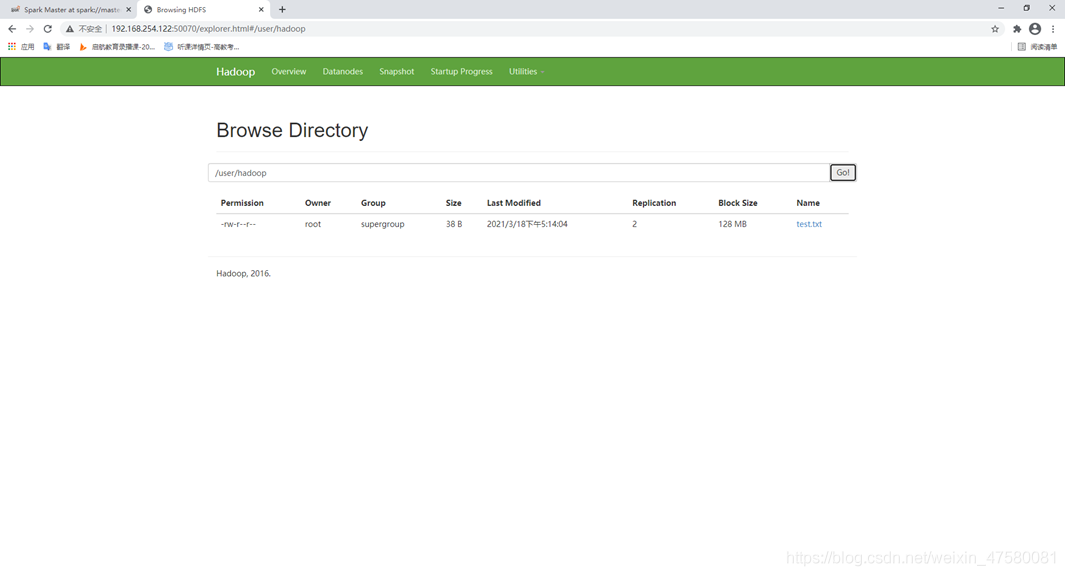

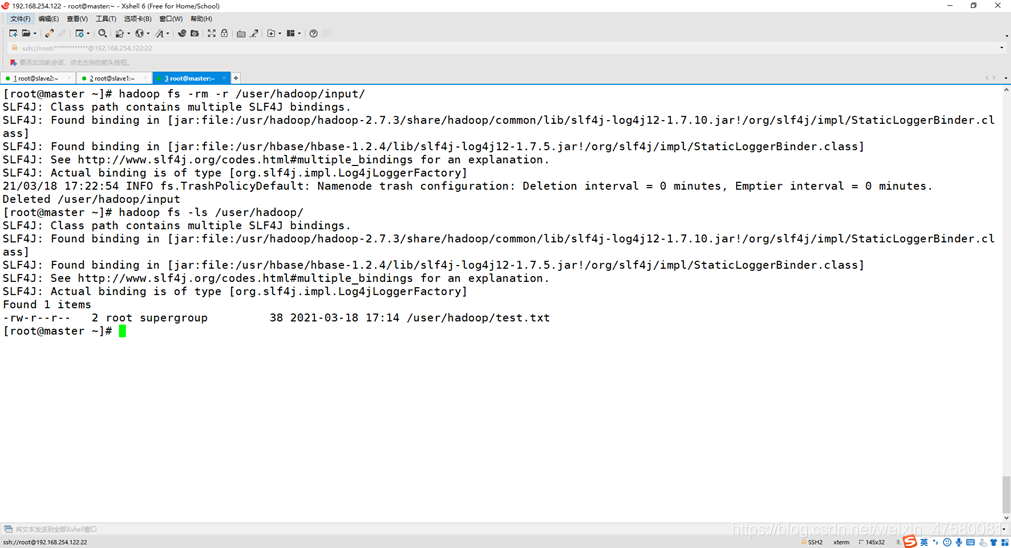
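Creating the subdirectory and copying within HDFS can be combined in one helper built on `FileSystem.mkdirs` and `FileUtil.copy`. A minimal sketch, assuming the Hadoop 2.7.x client libraries and the cluster's `core-site.xml` are on the classpath; `CpSketch` is a hypothetical name.

```scala
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.fs.{FileSystem, FileUtil, Path}

// Hypothetical helper (not from the original post): `hdfs dfs -mkdir` followed by `hdfs dfs -cp`.
// Assumes Hadoop 2.7.x client jars and the cluster's core-site.xml on the classpath.
object CpSketch {
  /** Creates `dstDir` if needed, then copies `src` into it; the source file is kept. */
  def copyInto(fs: FileSystem, src: String, dstDir: String): Boolean = {
    val dir = new Path(dstDir)
    fs.mkdirs(dir)
    // Since `dir` is an existing directory, FileUtil.copy writes to dir/<source file name>.
    // deleteSource = false makes this a copy rather than a move.
    FileUtil.copy(fs, new Path(src), fs, dir, false, fs.getConf)
  }

  def main(args: Array[String]): Unit = {
    val fs = FileSystem.get(new Configuration())
    try println(s"copied: ${copyInto(fs, "/user/hadoop/test.txt", "/user/hadoop/input")}")
    finally fs.close()
  }
}
```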

(6) Delete test.txt from the HDFS "/user/hadoop" directory, and delete the input subdirectory of "/user/hadoop" together with everything under it.

Shell commands:

[root@master ~]


21/03/18 17:22:54 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 0 minutes, Emptier interval = 0 minutes.

Deleted /user/hadoop/input

[root@master ~]


Found 1 items

-rw-r--r-- 2 root supergroup 38 2021-03-18 17:14 /user/hadoop/test.txt

Screenshots:
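Both deletions in step (6) map to `FileSystem.delete`, whose `recursive` flag plays the role of `-r`. One difference worth knowing: the API never moves data to the HDFS trash, whereas the shell does when trash is enabled; with "Deletion interval = 0 minutes", as in the log line above, the effect is the same. A minimal sketch, assuming the Hadoop 2.7.x client libraries and the cluster's `core-site.xml` are on the classpath; `RmSketch` is a hypothetical name.

```scala
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.fs.{FileSystem, Path}

// Hypothetical helper (not from the original post): Scala counterpart of `hdfs dfs -rm [-r]`.
// Assumes Hadoop 2.7.x client jars and the cluster's core-site.xml on the classpath.
object RmSketch {
  /**
   * Deletes `path`; recursive = true is required for a non-empty directory.
   * FileSystem.delete removes data immediately and does not use the HDFS trash.
   */
  def rm(fs: FileSystem, path: String, recursive: Boolean): Boolean =
    fs.delete(new Path(path), recursive)

  def main(args: Array[String]): Unit = {
    val fs = FileSystem.get(new Configuration())
    try {
      println(s"rm test.txt -> ${rm(fs, "/user/hadoop/test.txt", recursive = false)}")
      println(s"rm -r input -> ${rm(fs, "/user/hadoop/input", recursive = true)}")
    } finally fs.close()
  }
}
```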

IV. Reading File System Data with Spark

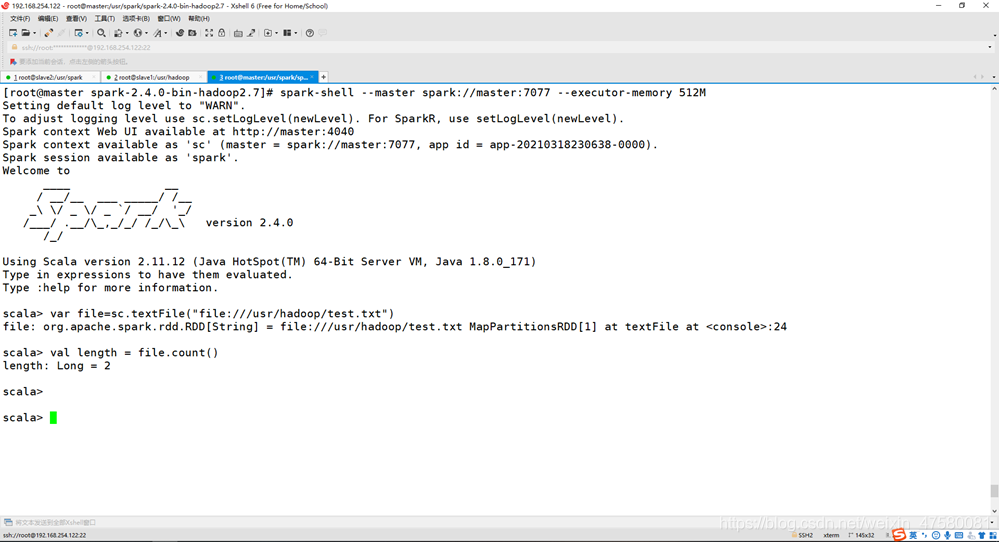

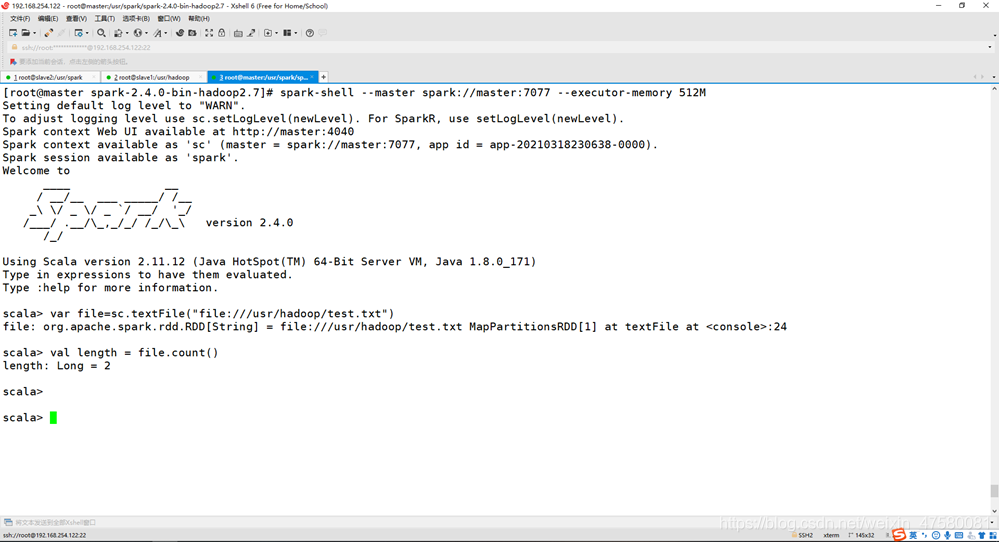

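(1) In spark-shell, read the local Linux file "/home/hadoop/test.txt" and count its lines. (The transcript below reads file:///usr/hadoop/test.txt instead; use whichever path your test.txt actually lives at.)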

Shell commands:

[root@master spark-2.4.0-bin-hadoop2.7]

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://master:4040

Spark context available as 'sc' (master = spark://master:7077, app id = app-20210318230638-0000).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.0

/_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_171)

Type in expressions to have them evaluated.

Type :help for more information.

scala> var file=sc.textFile("file:///usr/hadoop/test.txt")

file: org.apache.spark.rdd.RDD[String] = file:///usr/hadoop/test.txt MapPartitionsRDD[1] at textFile at <console>:24

scala> val length = file.count()

length: Long = 2

Screenshots:

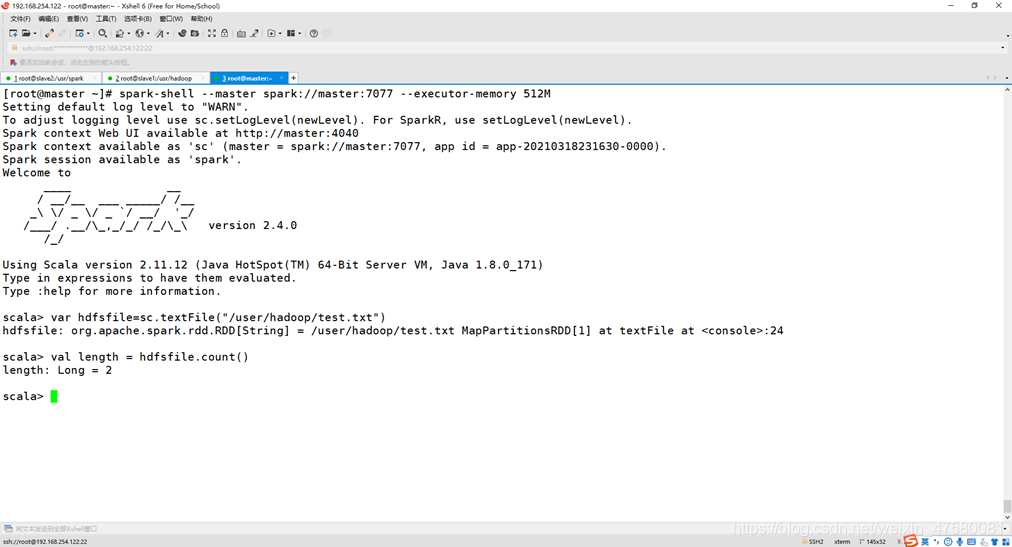

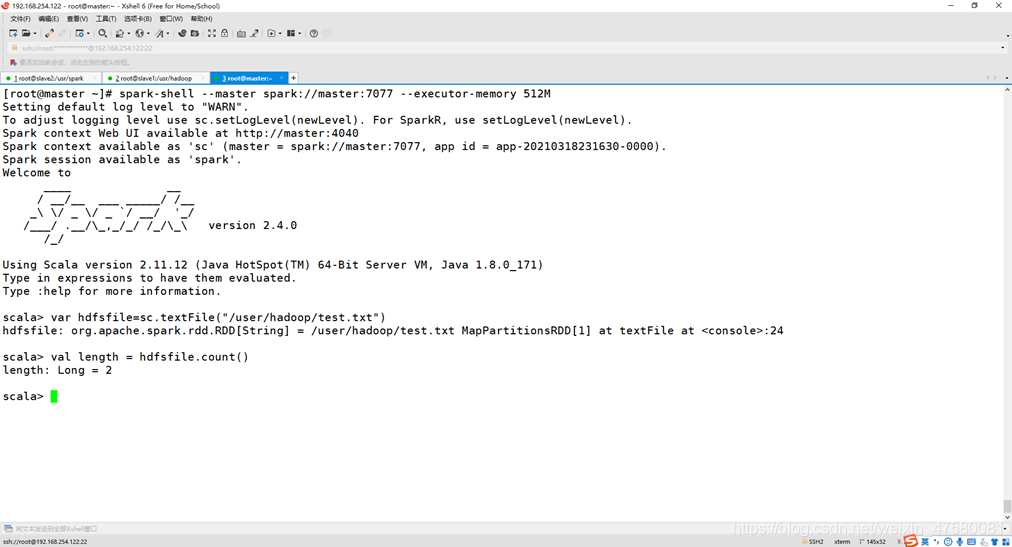
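Because the shell above is attached to `spark://master:7077`, a `file://` path is read by the executors, and the Spark programming guide requires such a file to be present at the same path on every worker node as well. Running the driver in local mode sidesteps that. A minimal sketch, assuming spark-core 2.4.x on the classpath; `LocalCountSketch` is a hypothetical name.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical sketch (not from the original post): count the lines of a local file in local mode.
// In local mode the driver and executors run on one machine, so the file only has to exist there.
object LocalCountSketch {
  /** Number of lines in `path`; a file:// URI forces the local file system. */
  def countLines(sc: SparkContext, path: String): Long = sc.textFile(path).count()

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("LocalCountSketch").setMaster("local[*]")
    val sc = new SparkContext(conf)
    try println(countLines(sc, "file:///home/hadoop/test.txt"))
    finally sc.stop()
  }
}
```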

(2) In spark-shell, read the HDFS file "/user/hadoop/test.txt" (create it first if it does not exist), then count its lines.

Shell commands:

[root@master ~]

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://master:4040

Spark context available as 'sc' (master = spark://master:7077, app id = app-20210318231630-0000).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.0

/_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_171)

Type in expressions to have them evaluated.

Type :help for more information.

scala> var hdfsfile=sc.textFile("/user/hadoop/test.txt")

hdfsfile: org.apache.spark.rdd.RDD[String] = /user/hadoop/test.txt MapPartitionsRDD[1] at textFile at <console>:24

scala> val length = hdfsfile.count()

length: Long = 2

Screenshots:

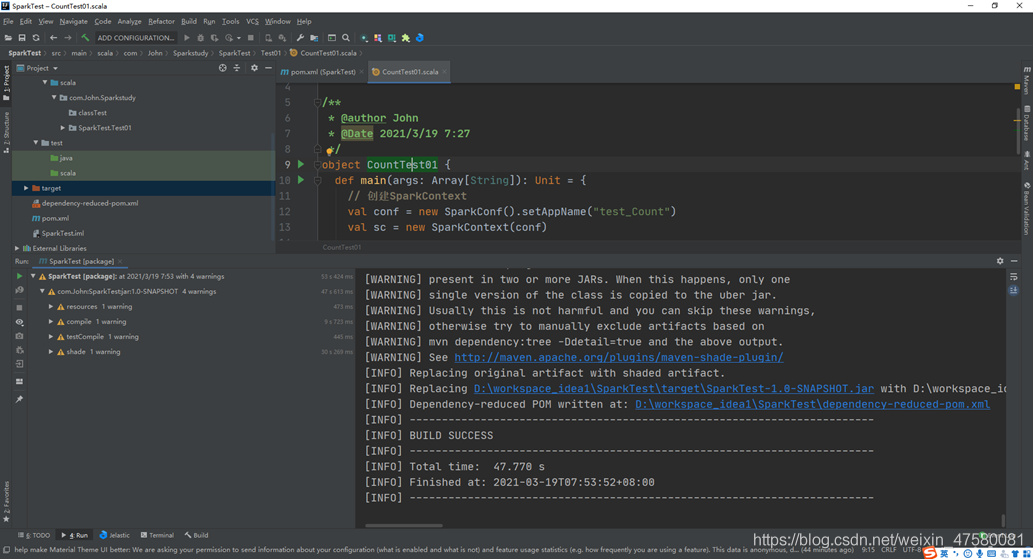

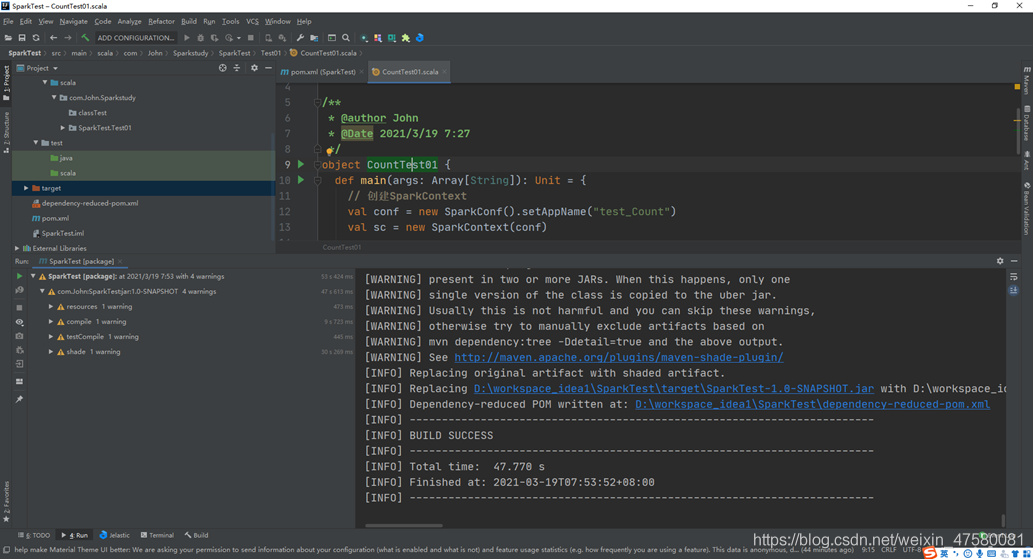

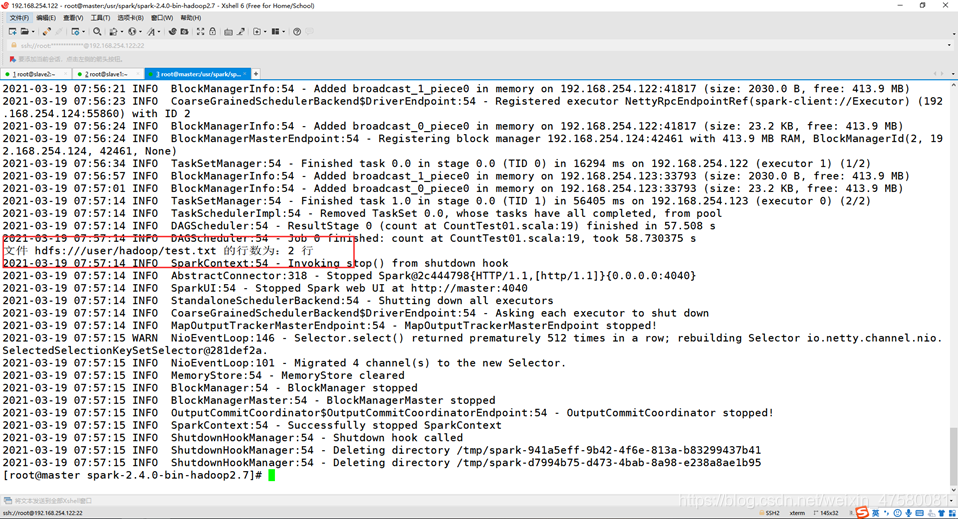
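In step (2) the bare path "/user/hadoop/test.txt" carries no scheme, so Hadoop resolves it against `fs.defaultFS` from `core-site.xml`; that is why it reads HDFS rather than the local disk. The sketch below makes this visible by qualifying the path before counting. It assumes spark-core 2.4.x (which pulls in the Hadoop client) on the classpath; `HdfsCountSketch` and `qualify` are hypothetical names.

```scala
import org.apache.hadoop.fs.Path
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical sketch (not from the original post): show which file system a bare path resolves to.
object HdfsCountSketch {
  /** Qualifies `p` against fs.defaultFS, e.g. /user/hadoop/test.txt -> hdfs://<namenode>:<port>/user/hadoop/test.txt. */
  def qualify(sc: SparkContext, p: String): String = {
    val path = new Path(p)
    path.getFileSystem(sc.hadoopConfiguration).makeQualified(path).toString
  }

  def countLines(sc: SparkContext, p: String): Long = sc.textFile(p).count()

  def main(args: Array[String]): Unit = {
    // No setMaster here: the master URL comes from spark-submit (e.g. spark://master:7077)
    val sc = new SparkContext(new SparkConf().setAppName("HdfsCountSketch"))
    try {
      val p = qualify(sc, "/user/hadoop/test.txt")
      println(s"$p: ${countLines(sc, p)} lines")
    } finally sc.stop()
  }
}
```

Without a cluster configuration, `fs.defaultFS` defaults to the local file system, so the same code then prints a `file:` URI instead.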

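(3) Write a standalone application that reads the HDFS file "/user/hadoop/test.txt" (create it first if it does not exist) and counts its lines; compile and package the application into a JAR with sbt, then submit the JAR to Spark with spark-submit.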

Tip: if you have not yet set up a Spark project in IDEA, see the following post:

Building a Spark project with Maven in IDEA: https://blog.csdn.net/weixin_47580081/article/details/115435536

Source code:

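package com.John.Sparkstudy.SparkTest.Test01

import org.apache.spark.{SparkConf, SparkContext}

// Counts the lines of /user/hadoop/test.txt in HDFS; the master URL is supplied by spark-submit

object CountTest01 {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("test_Count")

val sc = new SparkContext(conf)

// hdfs:/// with an empty authority resolves to the NameNode set in fs.defaultFS

val file = sc.textFile("hdfs:///user/hadoop/test.txt")

val num = file.count()

// textFile names the RDD after its input path, so file.name is the file path

println("File " + file.name + " has " + num + " line(s)")

// release the resources held by this application

sc.stop()

}

}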

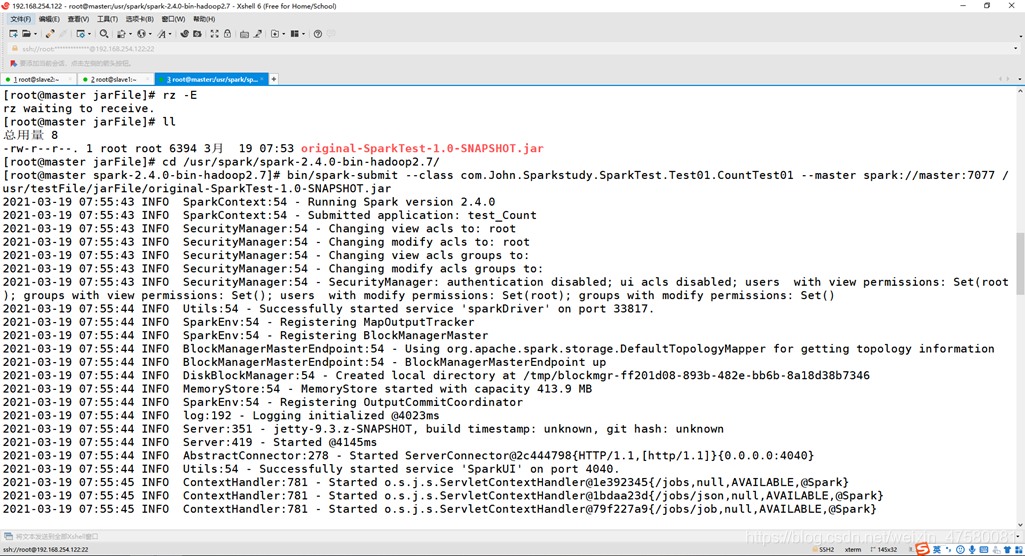

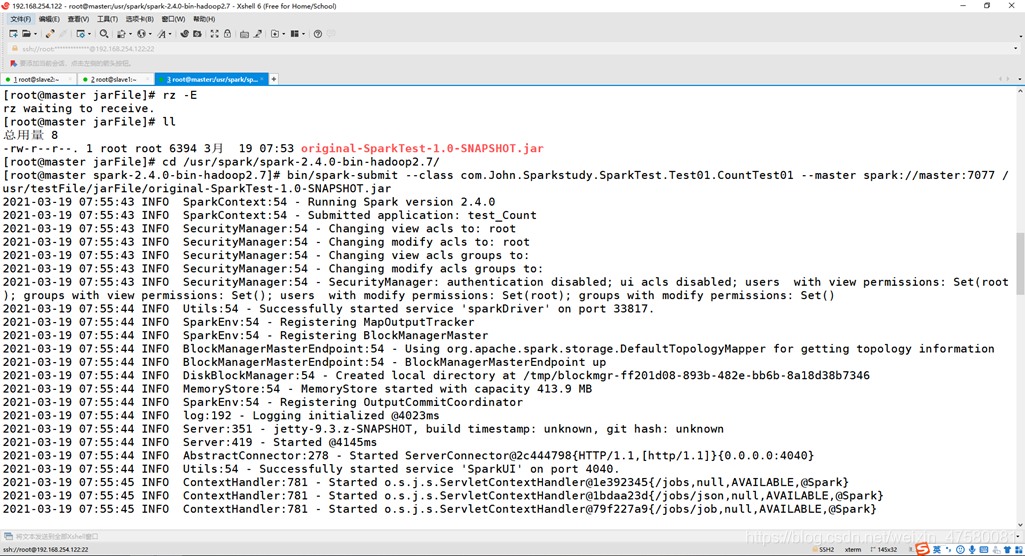
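Step (3) asks for sbt, while the JAR name `original-SparkTest-1.0-SNAPSHOT.jar` in the listing below suggests the project was packaged with Maven (the Maven Shade plugin uses that `original-` prefix). For sbt, a minimal `build.sbt` might look like the following. This is a sketch: the versions are an assumption taken from the spark-shell banner (Spark 2.4.0, Scala 2.11.12), and `name`/`version` are placeholders.

```scala
// build.sbt: minimal sketch; versions assumed from the spark-shell banner (Spark 2.4.0, Scala 2.11.12)
name := "SparkTest"

version := "1.0"

scalaVersion := "2.11.12"

// "provided": the cluster already ships Spark, so it is not bundled into the JAR
libraryDependencies += "org.apache.spark" %% "spark-core" % "2.4.0" % "provided"
```

Running `sbt package` in the project root writes the JAR under `target/scala-2.11/`, which can then be submitted with something like `spark-submit --class com.John.Sparkstudy.SparkTest.Test01.CountTest01 --master spark://master:7077 <path-to-jar>`.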

Shell commands:

[root@master jarFile]

rz waiting to receive.

[root@master jarFile]

total 8

-rw-r--r--. 1 root root 6394 Mar 19 07:53 original-SparkTest-1.0-SNAPSHOT.jar

[root@master jarFile]

[root@master spark-2.4.0-bin-hadoop2.7]

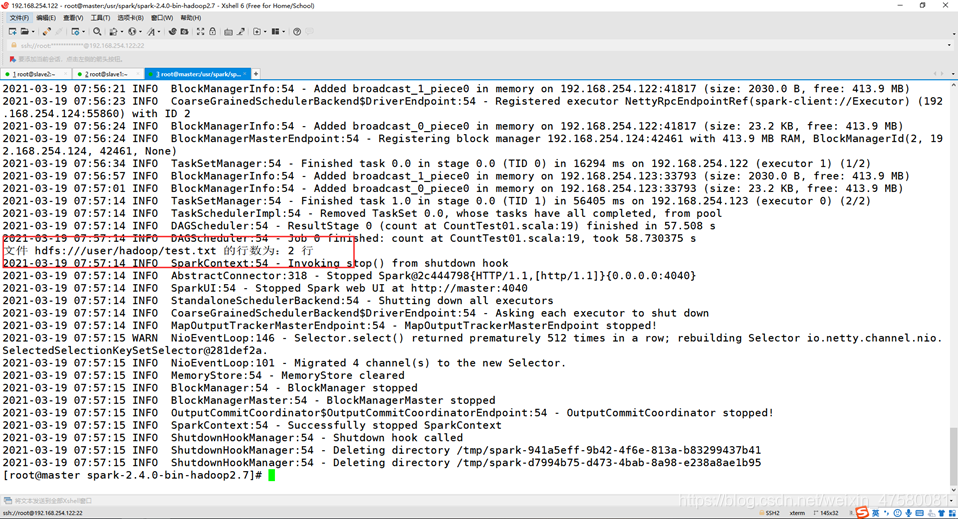

Screenshots:
